I agree with R. Pate and Todd Gardner; a std::set might be a good idea here. Even if you’re stuck using vectors, if you have enough duplicates, you might be better off creating a set to do the dirty work.

Let’s compare three approaches:

Just using vector, sort + unique

    sort( vec.begin(), vec.end() );
    vec.erase( unique( vec.begin(), vec.end() ), vec.end() );

Convert to set (manually)

    set<int> s;
    unsigned size = vec.size();
    for( unsigned i = 0; i < size; ++i ) s.insert( vec[i] );
    vec.assign( s.begin(), s.end() );

Convert to set (using a constructor)

    set<int> s( vec.begin(), vec.end() );
    vec.assign( s.begin(), s.end() );

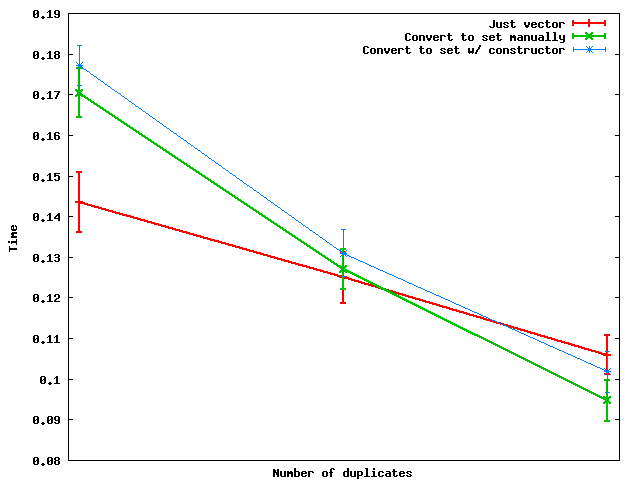

Here’s how these perform as the number of duplicates changes:

Summary: when the number of duplicates is large enough, it’s actually faster to convert to a set and then dump the data back into a vector.

And for some reason, doing the set conversion manually seems to be faster than using the set constructor, at least on the toy random data that I used.
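If you want to try this on your own data, here is a rough sketch of how the three approaches could be wrapped and timed. This is not the exact harness behind the numbers above; the function names, `make_input`, and `time_ms` are hypothetical helpers introduced for illustration, and the random input is just one assumed way to control how many duplicates there are.

```cpp
#include <algorithm>
#include <chrono>
#include <cstddef>
#include <random>
#include <set>
#include <vector>

// Approach 1: sort in place, then erase the adjacent duplicates.
void dedup_sort_unique(std::vector<int>& vec) {
    std::sort(vec.begin(), vec.end());
    vec.erase(std::unique(vec.begin(), vec.end()), vec.end());
}

// Approach 2: insert into a set one element at a time, then copy back.
void dedup_set_manual(std::vector<int>& vec) {
    std::set<int> s;
    for (std::size_t i = 0; i < vec.size(); ++i) s.insert(vec[i]);
    vec.assign(s.begin(), s.end());
}

// Approach 3: build the set with its range constructor, then copy back.
void dedup_set_ctor(std::vector<int>& vec) {
    std::set<int> s(vec.begin(), vec.end());
    vec.assign(s.begin(), s.end());
}

// Hypothetical helper: n random ints drawn from [0, distinct).
// A smaller `distinct` relative to n means more duplicates.
std::vector<int> make_input(std::size_t n, int distinct, unsigned seed = 42) {
    std::mt19937 rng(seed);
    std::uniform_int_distribution<int> dist(0, distinct - 1);
    std::vector<int> v(n);
    for (auto& x : v) x = dist(rng);
    return v;
}

// Hypothetical helper: time a single call, in milliseconds.
// The input is taken by value so every approach starts from the same data.
template <typename F>
double time_ms(F dedup, std::vector<int> input) {
    auto start = std::chrono::steady_clock::now();
    dedup(input);
    auto stop = std::chrono::steady_clock::now();
    return std::chrono::duration<double, std::milli>(stop - start).count();
}
```

Calling something like `time_ms(dedup_set_manual, make_input(1000000, 100))` for each approach and for several values of `distinct` should give a curve you can compare against the chart. A single run is noisy, so repeat each measurement a few times and build with optimizations on before drawing conclusions.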